Network Latency

| Network Latency is the round-trip delay for data transmissions. Increased network latency can interrupt communication streams and lead to downtime. |

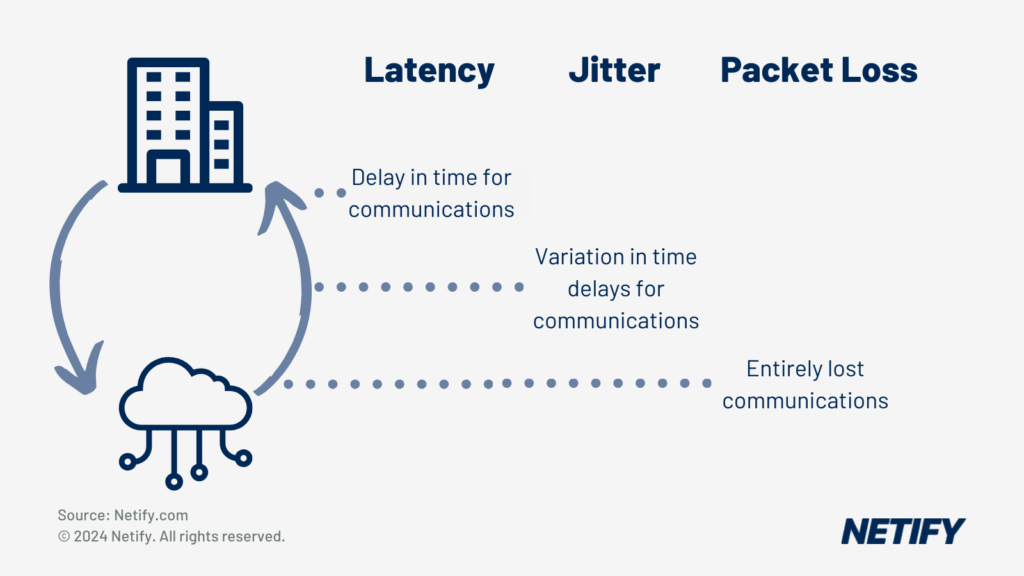

Network Latency refers to the round-trip time delay for data transmission between a source and destination within a network. Latency is therefore measured from the point of initiation (e.g. a download link is clicked) to the point at which the response is received (e.g. the file downloads). Jitter, by contrast, refers to the variation in time between packet deliveries.
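To make the distinction between latency and jitter concrete, the sketch below summarises a list of round-trip samples. The function name `summarise_rtts` and the sample values are illustrative assumptions. Jitter is taken here as the mean absolute difference between consecutive samples, which is one common simplification rather than the only definition.

```python
from statistics import mean


def summarise_rtts(samples_ms):
    """Return (average latency, jitter) in milliseconds for a list of RTT samples."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to estimate jitter")
    # Latency: the average round-trip time across all samples.
    latency = mean(samples_ms)
    # Jitter (simplified): mean absolute change between consecutive samples.
    diffs = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]
    return latency, mean(diffs)


# Hypothetical round-trip times, e.g. collected from repeated pings.
latency_ms, jitter_ms = summarise_rtts([20.0, 22.0, 21.0, 25.0])
print(f"latency={latency_ms:.1f} ms, jitter={jitter_ms:.2f} ms")
```

Two connections can share the same average latency while having very different jitter, which is why the two figures are reported separately.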

Factors Influencing Latency

There are several factors influencing network latency.

Factors Causing Latency

| Factor | Description |
|---|---|
| Propagation Delays | The physical distance between the source and destination heavily affects data transmission, as communications that have to travel further take longer. |
| Transmission Medium | The transmission medium determines the speed at which data can be transported. Fibre Optic provides the fastest speeds as data is transmitted up to near the speed of light. Copper cables provide a hard-wired but slower option and wireless provides the slo |
| Server Response | Because latency is measured as a round trip, the time the server takes to respond to a request counts towards it. The longer the server takes to respond, the more latency is experienced. |
| Network Hardware | Network hardware such as routers and switches handles data routing, so its processing speed affects latency, especially where traffic passes through many appliances. |
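The factors in the table can be combined into a rough back-of-the-envelope estimate. The sketch below is an assumption-laden model, not a measurement tool. The function `estimate_rtt_ms`, its parameters, and the default velocity factor of 0.67 (signals in optical fibre travelling at roughly two-thirds of the speed of light in a vacuum) are all illustrative choices.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # speed of light in a vacuum


def estimate_rtt_ms(distance_km, velocity_factor=0.67, hops=0,
                    per_hop_ms=0.0, server_ms=0.0):
    """Rough round-trip estimate combining the four factors in the table."""
    # Propagation delay (one way): distance divided by signal speed in the medium.
    speed_m_s = SPEED_OF_LIGHT_M_S * velocity_factor
    one_way_ms = (distance_km * 1000) / speed_m_s * 1000
    # Network hardware: each hop is crossed on the way out and on the way back.
    hardware_ms = 2 * hops * per_hop_ms
    # Server response time is paid once per request.
    return 2 * one_way_ms + hardware_ms + server_ms


# Hypothetical path: 1,000 km of fibre, 5 hops at 0.1 ms each, 20 ms server time.
rtt = estimate_rtt_ms(1000, hops=5, per_hop_ms=0.1, server_ms=20.0)
print(f"estimated round trip: {rtt:.2f} ms")
```

In this example the server response dominates the total, which illustrates why shortening the physical path alone does not always produce a large improvement.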

Technological Advances Reducing Latency

Technological advances have reduced network latency. The widespread adoption of affordable fibre optic cabling has enabled high-speed communications, reducing network latency where slower copper cabling was previously installed.

The introduction of 5G cellular telecommunications is a significant improvement over the previous 4G standard, with 5G reported as potentially up to 10 times faster due to its use of shorter wavelengths at much higher frequencies.

Dedicated communication links also reduce latency. By reducing the load on specific routes, organisations can improve communications for their most important applications.

Innovations in Network Configuration

Software Defined Networking (SDN) and Network Functions Virtualisation (NFV) provide dynamic and flexible network management, allowing data flows to be optimised and latency to be minimised.

Software Defined Wide Area Networking (SD-WAN) solutions also offer routing optimisation through Quality of Service (QoS) policies. This means that organisations can prioritise specific applications and data, reducing latency for business-critical applications.
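The prioritisation idea behind QoS policies can be sketched with a strict-priority queue. This is a simplified illustration, not how any particular SD-WAN product works. The traffic class names, their priority values and the `QosQueue` class are hypothetical.

```python
import heapq
from itertools import count

# Hypothetical traffic classes: lower number = higher priority.
PRIORITY = {"voip": 0, "remote_desktop": 1, "web": 2, "bulk": 3}


class QosQueue:
    """Strict-priority queue: always sends the highest-priority packet first."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker keeps FIFO order within a class

    def __len__(self):
        return len(self._heap)

    def enqueue(self, traffic_class, payload):
        entry = (PRIORITY[traffic_class], next(self._order), traffic_class, payload)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        _, _, traffic_class, payload = heapq.heappop(self._heap)
        return traffic_class, payload


queue = QosQueue()
queue.enqueue("bulk", "backup chunk")
queue.enqueue("voip", "audio frame 1")
queue.enqueue("web", "page request")
queue.enqueue("voip", "audio frame 2")

# Packets leave in priority order, regardless of arrival order.
sent_order = [queue.dequeue() for _ in range(len(queue))]
print(sent_order)
```

Strict priority is only one scheduling discipline. It keeps latency low for the top class but can starve lower classes under load, so practical policies often combine it with bandwidth limits or weighted sharing.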

The Role of Artificial Intelligence

Artificial Intelligence (AI) has been integrated into network management in order to offer several benefits for latency.

AI can apply predictive analysis, in which network telemetry is analysed and matched against patterns in historical data, to mitigate potential network issues before they occur. Machine Learning can be used to dynamically optimise network routing and responses in order to minimise latency and improve network efficiency.
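As a much-simplified stand-in for the predictive analysis described above, the sketch below compares a new latency reading against a historical baseline using a z-score. Real systems use far richer models. The function name `is_latency_anomaly`, the threshold of three standard deviations and the sample data are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_latency_anomaly(history_ms, new_sample_ms, threshold=3.0):
    """Flag a reading far above the historical baseline.

    Only upward deviations (higher latency) are treated as anomalies.
    """
    baseline = mean(history_ms)
    spread = stdev(history_ms)
    if spread == 0:
        # No historical variation: any increase at all stands out.
        return new_sample_ms > baseline
    z_score = (new_sample_ms - baseline) / spread
    return z_score > threshold


# Hypothetical telemetry: recent round-trip times in milliseconds.
history = [20, 21, 19, 22, 20, 21, 19, 20]
print(is_latency_anomaly(history, 21))  # within normal variation
print(is_latency_anomaly(history, 80))  # far above the baseline
```

A check like this could feed an alert or trigger a routing change before users notice a problem, which is the outcome the predictive approach aims for.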

Product Solutions for Improved Latency

There are many products designed for improving latency.

One such category is advanced routers and switches. These offer improved software management compared with more traditional devices, enabling more efficient routing and the introduction of Quality of Service (QoS) policies. Advanced routers often also offer higher-speed interfaces, reducing the time taken for data to travel through the router.

Cloud-Based Network Management Solutions leverage both Artificial Intelligence and Machine Learning for network routing optimisations, reducing latency by choosing the optimal network path.
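Choosing the optimal network path can be illustrated with a classic shortest-path search in which link latency is the edge weight. The sketch below uses Dijkstra's algorithm as a stand-in. It is not a description of how any specific product selects paths, and the topology, site names and latencies are hypothetical.

```python
import heapq


def lowest_latency_path(links, source, destination):
    """Dijkstra's algorithm over a graph whose edge weights are link latencies in ms."""
    best = {source: 0.0}
    previous = {}
    frontier = [(0.0, source)]
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node == destination:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for neighbour, latency_ms in links.get(node, {}).items():
            new_cost = cost + latency_ms
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(frontier, (new_cost, neighbour))
    if destination not in best:
        return None, float("inf")
    # Walk back from the destination to rebuild the path.
    path = [destination]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], best[destination]


# Hypothetical topology: one-way link latencies in milliseconds.
links = {
    "branch": {"hub_a": 5.0, "hub_b": 12.0},
    "hub_a": {"datacentre": 30.0, "hub_b": 4.0},
    "hub_b": {"datacentre": 10.0},
    "datacentre": {},
}
path, total_ms = lowest_latency_path(links, "branch", "datacentre")
print(path, total_ms)
```

Here the route with the most hops is also the fastest, which shows why path selection based on measured latency can outperform a simple fewest-hops rule.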

Content Delivery Networks (CDNs) are being increasingly used to cache content closer to the user’s geographical location. This reduces the distance data has to travel from source to destination, reducing propagation delays.
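The effect of serving content from a nearby location can be sketched by picking the geographically closest edge node. This is an illustration only: real CDNs generally steer users with mechanisms such as DNS-based or anycast routing rather than raw distance, and the edge locations, coordinates and function names below are assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_edge(user_location, edges):
    """Return the name of the edge node closest to the user."""
    return min(edges, key=lambda name: haversine_km(*user_location, *edges[name]))


# Hypothetical edge nodes as (latitude, longitude).
edges = {
    "frankfurt": (50.11, 8.68),
    "new_york": (40.71, -74.01),
    "singapore": (1.35, 103.82),
}
user_in_london = (51.51, -0.13)
chosen = nearest_edge(user_in_london, edges)
print(chosen, round(haversine_km(*user_in_london, *edges[chosen])), "km")
```

Serving the London user from the nearest node rather than a distant origin shortens the path by thousands of kilometres, which is the propagation-delay saving described above.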

The Impact of Latency on User Experience

Minimising latency is essential for an optimal user experience. This is especially true for critical business applications, such as real-time communications, Voice over Internet Protocol (VoIP), Remote Desktop Connections (RDC) and cloud-based applications (e.g. Office 365). These applications are very latency-sensitive, so minimising latency for their data flows ensures seamless, reliable performance and improves the overall user experience. Additionally, applications such as video streaming and online gaming are very resource intensive, so any increase in latency can be highly noticeable and degrade the user experience.

Future Trends in Reducing Latency

One idea often proposed for reducing latency is Quantum Networking. This relies on Quantum Entanglement, in which the states of two particles remain correlated across large geographical distances. It is sometimes claimed that this would cut out transport time altogether. However, quantum teleportation still requires a classical communication channel to complete the transfer, so entanglement alone cannot carry information faster than light. Any latency benefit therefore remains speculative.

Another trend for reducing latency is the improvement of networking protocols. Networking protocols define how data traffic is handled and transmitted across the network, so improvements to them could lead to more efficient data handling and lower latency.

FAQs on Network Latency

- What is considered good latency?

For applications such as Voice over Internet Protocol (VoIP), optimal latency is less than 150 milliseconds. Performance degrades beyond this, becoming significantly noticeable past 250 milliseconds and unusable past 400 milliseconds.
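The thresholds above translate directly into a small classifier. The function name and the label strings are illustrative. The boundaries are the 150, 250 and 400 millisecond figures quoted in the answer.

```python
def classify_voip_latency(latency_ms):
    """Map a latency reading to the quality bands quoted above."""
    if latency_ms < 150:
        return "optimal"
    if latency_ms <= 250:
        return "degraded"
    if latency_ms <= 400:
        return "significantly noticeable"
    return "unusable"


for reading in (80, 200, 300, 450):
    print(reading, "ms:", classify_voip_latency(reading))
```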

- What is the difference between latency, bandwidth, and throughput?

Latency is the delay before data begins to transfer, bandwidth is the maximum amount of data that can be transferred per unit of time, and throughput is the actual rate of successful data transfer.
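A short worked example shows how the three quantities relate. The model below is deliberately simple: it assumes a single transfer that waits one latency period and then runs at full bandwidth, and it ignores protocol overhead, congestion and retransmissions. The function name and figures are illustrative.

```python
def transfer_summary(size_bytes, bandwidth_bps, latency_s):
    """Return (total transfer time in seconds, effective throughput in bits/s)."""
    size_bits = size_bytes * 8
    # Total time = initial delay + time to push the bits through the link.
    total_time_s = latency_s + size_bits / bandwidth_bps
    # Throughput = data actually delivered divided by the time it took.
    throughput_bps = size_bits / total_time_s
    return total_time_s, throughput_bps


# Hypothetical transfer: 1 MB file, 100 Mbit/s link, 50 ms latency.
total_s, throughput = transfer_summary(1_000_000, 100_000_000, 0.05)
print(f"time={total_s:.3f} s, throughput={throughput / 1e6:.1f} Mbit/s")
```

Even though the link offers 100 Mbit/s of bandwidth, the effective throughput for this small file is noticeably lower, because the latency makes up a large share of the total transfer time.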

Conclusion

Latency is the time taken for a full request and response round trip within a network. Network latency affects the efficiency and responsiveness of applications, with performance degrading as latency increases.

To minimise latency, technological advancements such as Software-Defined Networking, improved cellular communications and fibre optics are being increasingly used, with quantum networking and improved networking protocols being touted as future innovations that could further improve latency issues.

As understanding how to manage latency is crucial for user experience, organisations should be aware of what is considered good latency and the differences between latency, bandwidth and throughput so that they can better manage Quality of Service policies when prioritising essential applications.